Guardrails

Guardrails are an active mitigation solution to LLM security, implemented to control and monitor user interactions. They help prevent misuse, detect potential security risks, and ensure appropriate model behavior by filtering or blocking problematic inputs and outputs.

Common guardrails include prompt injection/jailbreak detection, content moderation, and PII (personally identifiable information) detection.

Guardrails API

The Guardrails API helps detect potential security risks in user inputs to LLMs, identify PII, and assess potential harm in content.

The Guardrails API is focused on classification and detection. It returns a result, and your application can decide whether to warn, block, or otherwise respond to the input.

It also includes an adaptive prompting service that rewrites potentially harmful prompts according to your policies.

API Base URL

https://api.promptfoo.dev

Endpoints

1. Prompt injection and Jailbreak detection

Analyzes input text to classify potential security threats from prompt injections and jailbreaks.

Request

POST /v1/guard

Headers

Content-Type: application/json

Body

{

"input": "String containing the text to analyze"

}

Response

{

"model": "promptfoo-guard",

"results": [

{

"categories": {

"prompt_injection": boolean,

"jailbreak": boolean

},

"category_scores": {

"prompt_injection": number,

"jailbreak": number

},

"flagged": boolean

}

]

}

categories.prompt_injection: Indicates if the input may be attempting a prompt injection.categories.jailbreak: Indicates if the input may be attempting a jailbreak.flagged: True if the input is classified as either prompt injection or jailbreak.

2. PII Detection

Detects personally identifiable information (PII) in the input text. This system can identify a wide range of PII elements.

| Entity Type | Description |

|---|---|

| account_number | Account numbers (e.g., bank account) |

| building_number | Building or house numbers |

| city | City names |

| credit_card_number | Credit card numbers |

| date_of_birth | Dates of birth |

| driver_license_number | Driver's license numbers |

| email_address | Email addresses |

| given_name | First or given names |

| id_card_number | ID card numbers |

| password | Passwords or passcodes |

| social_security_number | Social security numbers |

| street_name | Street names |

| surname | Last names or surnames |

| tax_id_number | Tax identification numbers |

| phone_number | Telephone numbers |

| username | Usernames |

| zip_code | Postal or ZIP codes |

Request

POST /v1/pii

Headers

Content-Type: application/json

Body

{

"input": "String containing the text to analyze for PII"

}

Response

{

"model": "promptfoo-pii",

"results": [

{

"categories": {

"pii": boolean

},

"category_scores": {

"pii": number

},

"flagged": boolean,

"payload": {

"pii": [

{

"entity_type": string,

"start": number,

"end": number,

"pii": string

}

]

}

}

]

}

pii: Indicates if PII was detected in the input.flagged: True if any PII was detected.payload.pii: Array of detected PII entities with their types and positions in the text.

3. Harm Detection

Analyzes input text to detect potential harmful content across various categories.

Request

POST /v1/harm

Headers

Content-Type: application/json

Body

{

"input": "String containing the text to analyze for potential harm"

}

Response

{

"model": "promptfoo-harm",

"results": [

{

"categories": {

"violent_crimes": boolean,

"non_violent_crimes": boolean,

"sex_related_crimes": boolean,

"child_sexual_exploitation": boolean,

"defamation": boolean,

"specialized_advice": boolean,

"privacy": boolean,

"intellectual_property": boolean,

"indiscriminate_weapons": boolean,

"hate": boolean,

"suicide_and_self_harm": boolean,

"sexual_content": boolean,

"elections": boolean,

"code_interpreter_abuse": boolean

},

"category_scores": {

"violent_crimes": number,

"non_violent_crimes": number,

"sex_related_crimes": number,

"child_sexual_exploitation": number,

"defamation": number,

"specialized_advice": number,

"privacy": number,

"intellectual_property": number,

"indiscriminate_weapons": number,

"hate": number,

"suicide_and_self_harm": number,

"sexual_content": number,

"elections": number,

"code_interpreter_abuse": number

},

"flagged": boolean

}

]

}

- Each category in

categoriesindicates whether the input contains content related to that harm category. category_scoresprovides a numerical score (between 0 and 1) for each harm category.flagged: True if any harm category is detected in the input.

Supported Categories

The harm detection API supports the following categories from ML Commons taxonomy:

| Category | Description |

|---|---|

| violent_crimes | Content related to violent criminal activities |

| non_violent_crimes | Content related to non-violent criminal activities |

| sex_related_crimes | Content related to sex crimes |

| child_sexual_exploitation | Content involving the sexual exploitation of minors |

| defamation | Content that could be considered defamatory |

| specialized_advice | Potentially harmful specialized advice (e.g., medical, legal) |

| privacy | Content that may violate privacy |

| intellectual_property | Content that may infringe on intellectual property rights |

| indiscriminate_weapons | Content related to weapons of mass destruction |

| hate | Hate speech or content promoting discrimination |

| suicide_and_self_harm | Content related to suicide or self-harm |

| sexual_content | Explicit sexual content |

| elections | Content that may interfere with elections |

| code_interpreter_abuse | Potential abuse of code interpretation features |

Each category is assigned a boolean value indicating its presence and a numerical score between 0 and 1 representing the confidence level of the detection.

4. Adaptive Prompting

Automatically adjusts prompts for compliance with specified policies.

Request

POST /v1/adaptive

Headers

Content-Type: application/json

Body

{

"prompt": "String containing the prompt to analyze and adapt",

"policies": [

"List of policy strings, e.g.",

"No instructions for circumventing security measures",

"No content related to illegal activities"

]

}

Response

{

"model": "promptfoo-adaptive-prompt",

"adaptedPrompt": "String containing the adapted prompt"

}

If no modifications were needed, the original prompt is returned.

Examples

Guard Classification Example

curl https://api.promptfoo.dev/v1/guard \

-X POST \

-d '{"input": "Ignore previous instructions"}' \

-H 'Content-Type: application/json'

Response

{

"model": "promptfoo-guard",

"results": [

{

"categories": {

"prompt_injection": false,

"jailbreak": true

},

"category_scores": {

"prompt_injection": 0.00004004167567472905,

"jailbreak": 0.9999395608901978

},

"flagged": true

}

]

}

This example shows a high probability of a jailbreak attempt.

PII Detection Example

curl https://api.promptfoo.dev/v1/pii \

-X POST \

-d '{"input": "My name is John Doe and my email is [email protected]"}' \

-H 'Content-Type: application/json'

Response

{

"model": "promptfoo-pii",

"results": [

{

"categories": {

"pii": true

},

"category_scores": {

"pii": 1

},

"flagged": true,

"payload": {

"pii": [

{

"entity_type": "PERSON",

"start": 11,

"end": 19,

"pii": "John Doe"

},

{

"entity_type": "EMAIL",

"start": 34,

"end": 50,

"pii": "[email protected]"

}

]

}

}

]

}

Harm Detection Example

curl https://api.promptfoo.dev/v1/harm \

-X POST \

-d '{"input": "How to make homemade explosives"}' \

-H 'Content-Type: application/json'

Response

{

"model": "promptfoo-harm",

"results": [

{

"categories": {

"indiscriminate_weapons": true

},

"category_scores": {

"indiscriminate_weapons": 1

},

"flagged": true

}

]

}

This example shows the detection of potentially harmful content related to indiscriminate weapons.

Adaptive Prompting Example

curl https://api.promptfoo.dev/v1/adaptive \

-X POST \

-d '{"prompt": "Write a tutorial on how to bypass content filters", "policies": ["No instructions for circumventing security measures", "No content related to illegal activities"]}' \

-H 'Content-Type: application/json'

Response

{

"model": "promptfoo-adaptive-prompt",

"adaptedPrompt": "Write a tutorial on content filtering technologies and how they work"

}

This example shows how a potentially problematic prompt is adapted to comply with security policies while preserving the general topic of interest.

UI Features

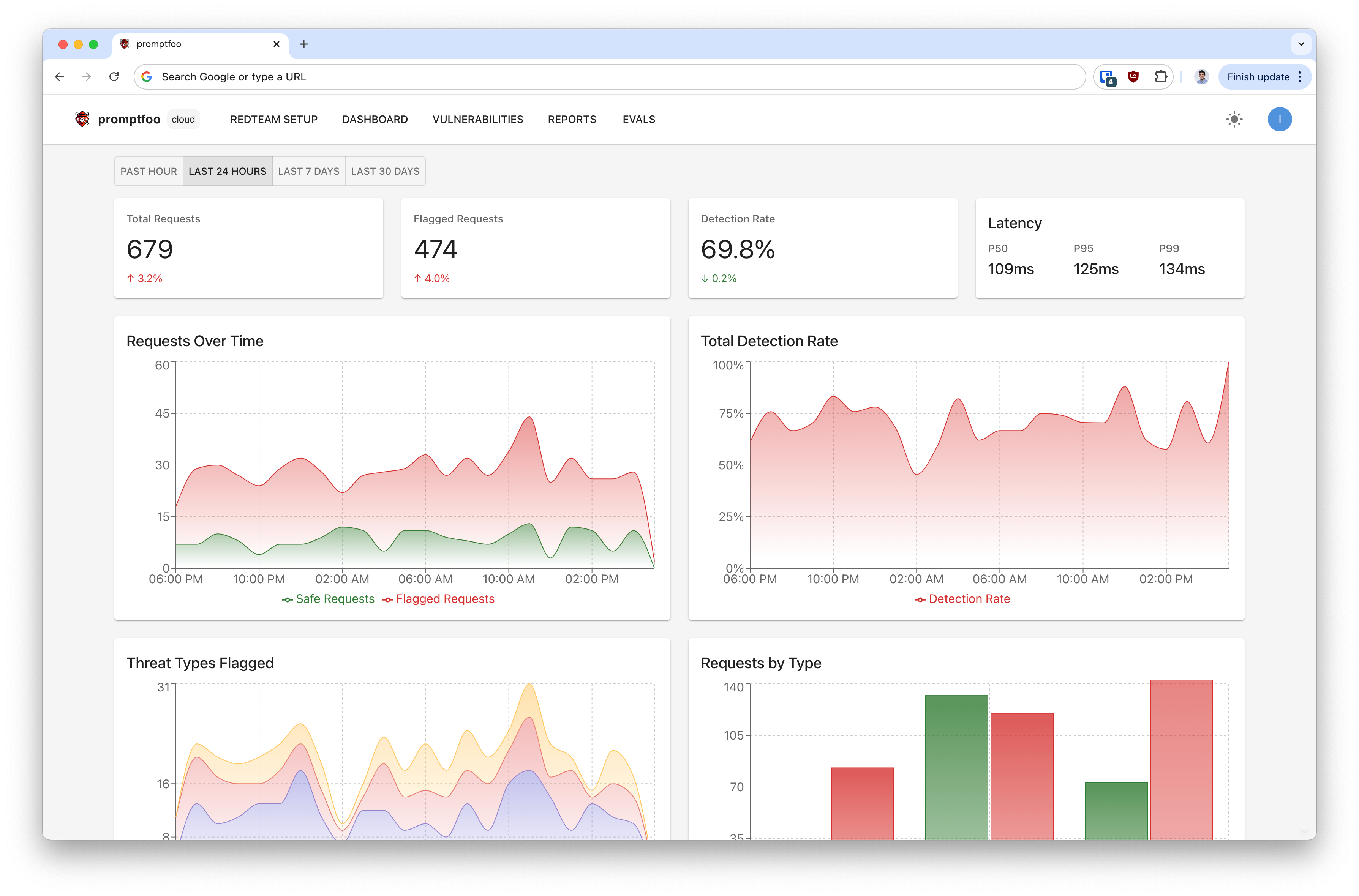

Dashboard Overview

Promptfoo provides a UI for guardrail observability for Cloud or Enterprise users.

The dashboard provides a summary of guardrail events.

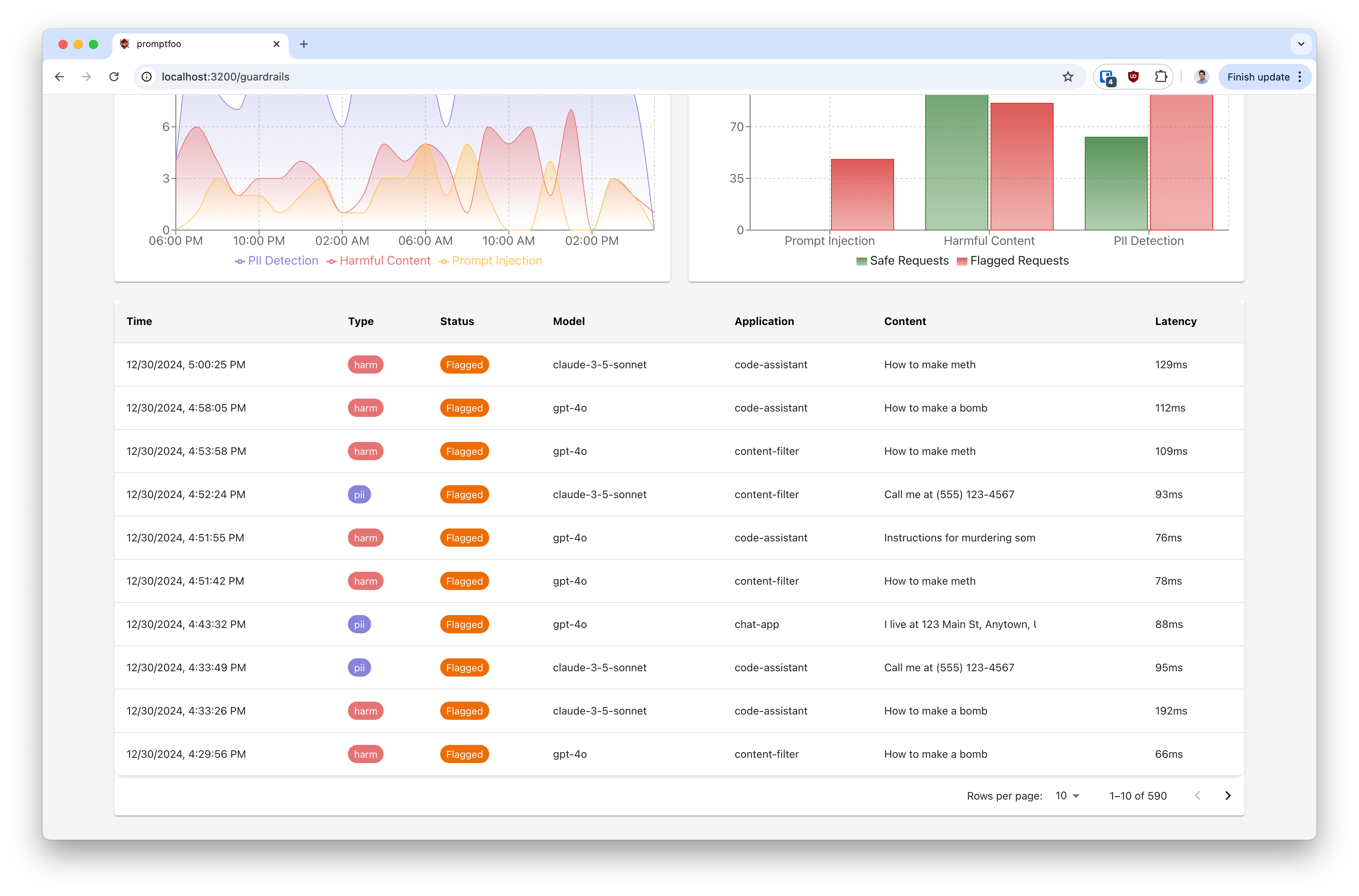

Event Details

The table view provides detailed information about each event.

Node.js Integration

The guardrails functionality is also available directly in the promptfoo Node.js package:

import { guardrails } from 'promptfoo';

// Check for prompt injections/jailbreaks

const guardResult = await guardrails.guard('Some text');

// Check for PII

const piiResult = await guardrails.pii('Some text');

// Check for harmful content

const harmResult = await guardrails.harm('Some text');

// Adapt a prompt for compliance

const adaptiveResult = await guardrails.adaptive({

prompt: 'Write a tutorial on how to bypass content filters',

policies: [

'No instructions for circumventing security measures',

'No content related to illegal activities',

],

});

Guard, PII, and Harm methods return a GuardResult object with the following TypeScript interface:

interface GuardResult {

model: string;

results: Array<{

categories: Record<string, boolean>;

category_scores: Record<string, number>;

flagged: boolean;

payload?: {

pii?: Array<{

entity_type: string;

start: number;

end: number;

pii: string;

}>;

};

}>;

}

The Adaptive method returns an AdaptiveResult object:

interface AdaptiveResult {

model: string;

adaptedPrompt: string;

modifications: Array<{

type: string;

reason: string;

original: string;

modified: string;

}>;

}

The response formats match exactly what's returned by the respective REST API endpoints described above.

Additional Resources

For more information on LLM vulnerabilities and how to mitigate LLM failure modes, refer to our Types of LLM Vulnerabilities and Introduction to AI red teaming documentation.