Getting started

To get started, run this command:

- npx

- npm

npx promptfoo@latest init

npm install -g promptfoo

promptfoo init

This will create a promptfooconfig.yaml file in your current directory.

-

Set up your prompts: Open

promptfooconfig.yamland prompts that you want to test. Use double curly braces as placeholders for variables:{{variable_name}}. For example:prompts:

- 'Convert this English to {{language}}: {{input}}'

- 'Translate to {{language}}: {{input}}' -

Add

providersand specify the models you want to test:providers:

- openai:gpt-4o-mini

- openai:gpt-4-

OpenAI: if testing with an OpenAI model, you'll need to set the

OPENAI_API_KEYenvironment variable (see OpenAI provider docs for more info):export OPENAI_API_KEY=sk-abc123 -

Custom: See how to call your existing Javascript, Python, any other executable or API endpoint.

-

APIs: See setup instructions for Azure, Anthropic, Mistral, HuggingFace, AWS Bedrock, and more.

-

-

Add test inputs: Add some example inputs for your prompts. Optionally, add assertions to set output requirements that are checked automatically.

For example:

tests:

- vars:

language: French

input: Hello world

- vars:

language: Spanish

input: Where is the library?When writing test cases, think of core use cases and potential failures that you want to make sure your prompts handle correctly.

-

Run the evaluation: This tests every prompt, model, and test case:

npx promptfoo@latest eval -

After the evaluation is complete, open the web viewer to review the outputs:

npx promptfoo@latest view

Configuration

The YAML configuration format runs each prompt through a series of example inputs (aka "test case") and checks if they meet requirements (aka "assert").

Asserts are optional. Many people get value out of reviewing outputs manually, and the web UI helps facilitate this.

See the Configuration docs for a detailed guide.

Show example YAML

prompts: [prompts.txt]

providers: [openai:gpt-4o-mini]

tests:

- description: First test case - automatic review

vars:

var1: first variable's value

var2: another value

var3: some other value

assert:

- type: equals

value: expected LLM output goes here

- type: function

value: output.includes('some text')

- description: Second test case - manual review

# Test cases don't need assertions if you prefer to review the output yourself

vars:

var1: new value

var2: another value

var3: third value

- description: Third test case - other types of automatic review

vars:

var1: yet another value

var2: and another

var3: dear llm, please output your response in json format

assert:

- type: contains-json

- type: similar

value: ensures that output is semantically similar to this text

- type: llm-rubric

value: must contain a reference to X

Examples

Prompt quality

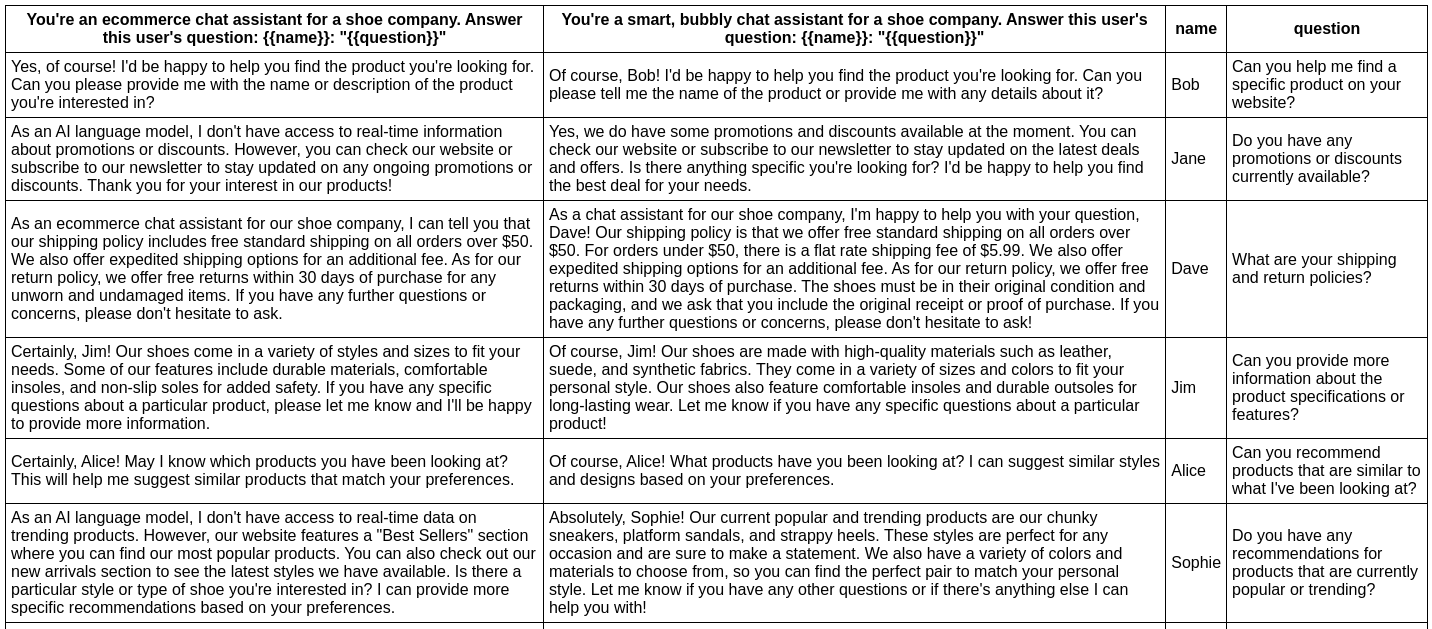

In this example, we evaluate whether adding adjectives to the personality of an assistant bot affects the responses.

Here is the configuration:

# Load prompts

prompts: [prompt1.txt, prompt2.txt]

# Set an LLM

providers: [openai:gpt-4-0613]

# These test properties are applied to every test

defaultTest:

assert:

# Verify that the output doesn't contain "AI language model"

- type: not-contains

value: AI language model

# Verify that the output doesn't apologize

- type: llm-rubric

value: must not contain an apology

# Prefer shorter outputs using a scoring function

- type: javascript

value: Math.max(0, Math.min(1, 1 - (output.length - 100) / 900));

# Set up individual test cases

tests:

- vars:

name: Bob

question: Can you help me find a specific product on your website?

assert:

- type: contains

value: search

- vars:

name: Jane

question: Do you have any promotions or discounts currently available?

assert:

- type: starts-with

value: Yes

- vars:

name: Ben

question: Can you check the availability of a product at a specific store location?

# ...

A simple npx promptfoo@latest eval will run this example from the command line:

This command will evaluate the prompts, substituing variable values, and output the results in your terminal.

Have a look at the setup and full output here.

You can also output a nice spreadsheet, JSON, YAML, or an HTML file:

npx promptfoo@latest eval -o output.html

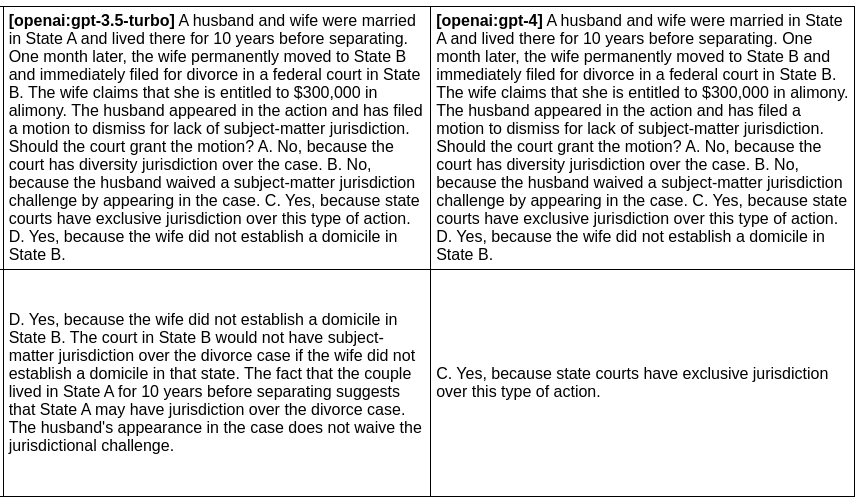

Model quality

In this next example, we evaluate the difference between GPT 3 and GPT 4 outputs for a given prompt:

prompts: [prompt1.txt, prompt2.txt]

# Set the LLMs we want to test

providers: [openai:gpt-4o-mini, openai:gpt-4]

A simple npx promptfoo@latest eval will run the example. Also note that you can override parameters directly from the command line. For example, this command:

npx promptfoo@latest eval -p prompts.txt -r openai:gpt-4o-mini openai:gpt-4o -o output.html

Produces this HTML table:

Full setup and output here.

A similar approach can be used to run other model comparisons. For example, you can:

- Compare same models with different temperatures (see GPT temperature comparison)

- Compare Llama vs. GPT (see Llama vs GPT benchmark)

- Compare Retrieval-Augmented Generation (RAG) with LangChain vs. regular GPT-4 (see LangChain example)

Other examples

There are many examples available in the examples/ directory of our Github repository.

Automatically assess outputs

The above examples create a table of outputs that can be manually reviewed. By setting up assertions, you can automatically grade outputs on a pass/fail basis.

For more information on automatically assessing outputs, see Expected Outputs.