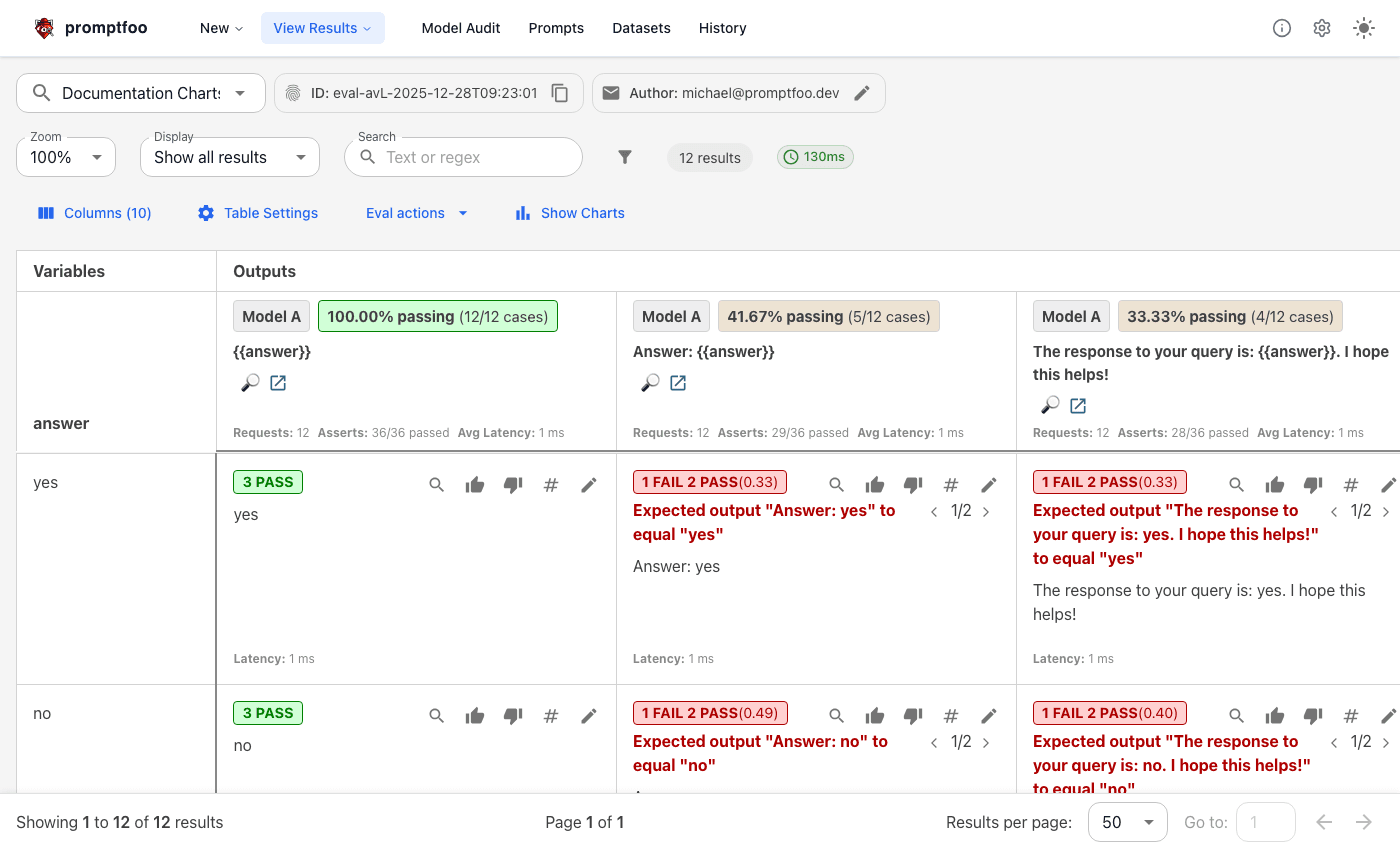

Using the web viewer

After running an eval, view results in your browser:

npx promptfoo@latest view

See promptfoo view for CLI options.

Keyboard Shortcuts

| Shortcut | Action |
|---|---|
| Ctrl+K / Cmd+K | Open eval selector |
| Esc | Clear search |
| Shift (hold) | Show extra cell actions |

Toolbar

- Eval selector - Switch between evals

- Zoom - Scale columns (50%-200%)

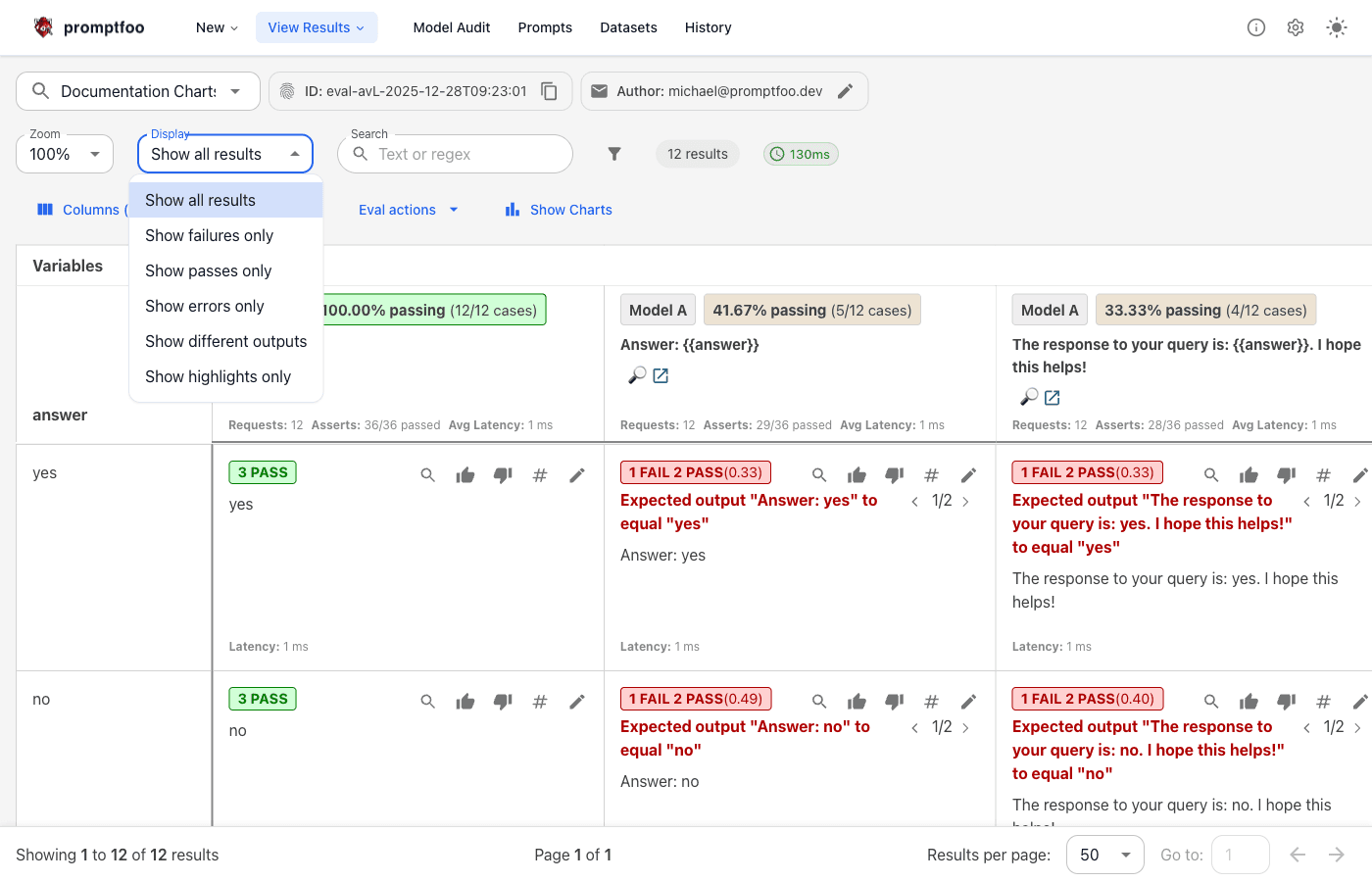

- Display mode - Filter: All, Failures, Passes, Errors, Different, Highlights

- Search - Text or regex

- Filters - By metrics, metadata, pass/fail. Operators: =, contains, >, <
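
The filter operators can be read as simple comparisons against a field value. The sketch below is illustrative only: `matches` is a hypothetical helper, and the exact semantics the viewer uses (case sensitivity, type coercion) are assumptions here, not documented behavior.

```python
def matches(value, operator, target):
    """Return True if `value` satisfies `operator` against `target`.

    Hypothetical helper; the viewer's real matching rules may differ.
    """
    if operator == "=":
        return str(value) == str(target)
    if operator == "contains":
        return str(target) in str(value)
    if operator in (">", "<"):
        try:
            left, right = float(value), float(target)
        except (TypeError, ValueError):
            # Non-numeric values never satisfy a numeric comparison.
            return False
        return left > right if operator == ">" else left < right
    raise ValueError(f"unsupported operator: {operator}")


# Hypothetical rows carrying a metadata field and a metric.
rows = [
    {"category": "billing-timeout", "latency": 1200},
    {"category": "login", "latency": 300},
]

slow = [r for r in rows if matches(r["latency"], ">", 1000)]
timeouts = [r for r in rows if matches(r["category"], "contains", "timeout")]
print(len(slow), len(timeouts))
```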

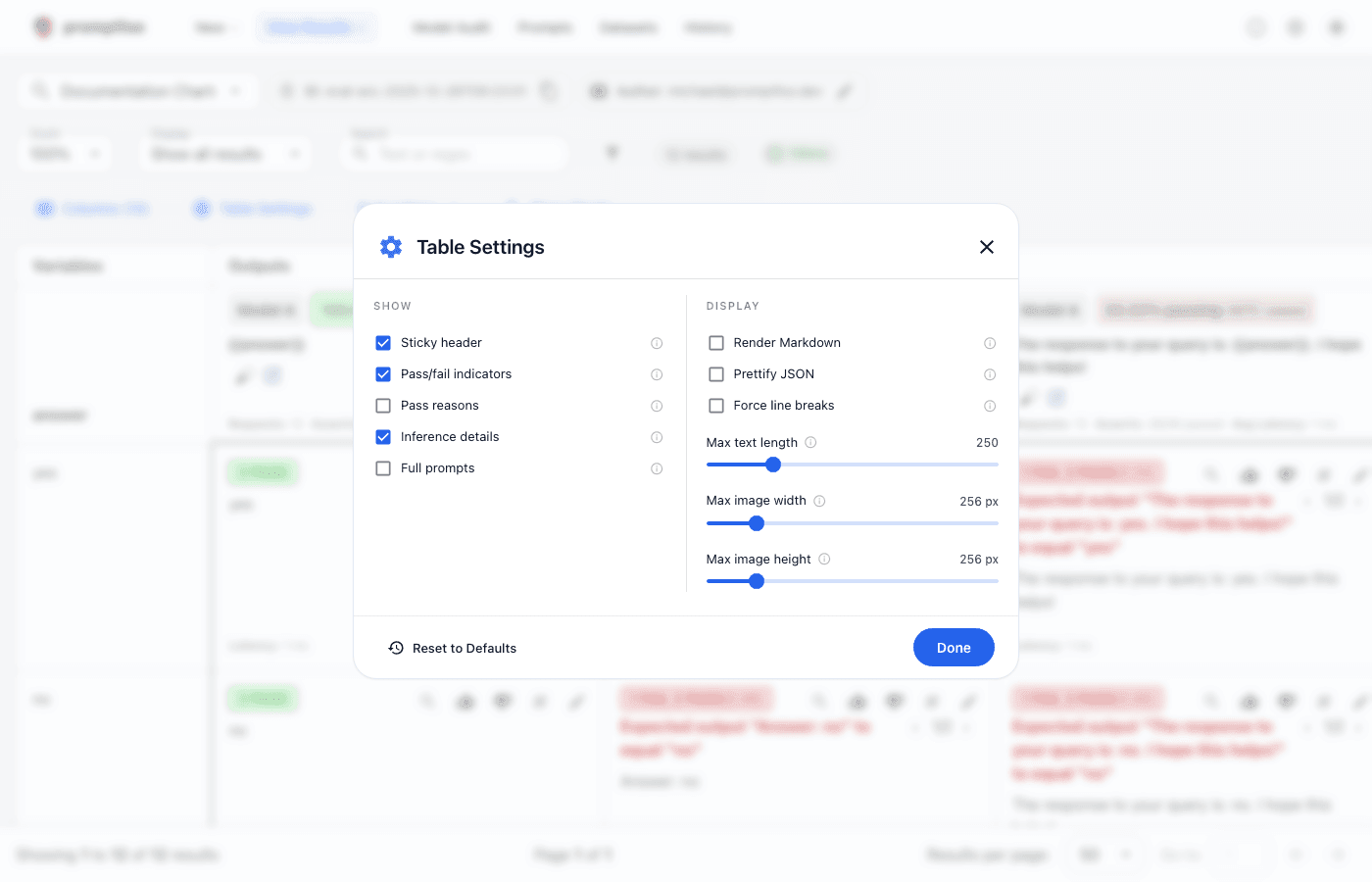

Table Settings

- Columns - Toggle variable and prompt visibility

- Truncation - Max text length, word wrap

- Rendering - Markdown, JSON prettification

- Inference details - Tokens, latency, cost, tokens/sec

- Media - Image size limits; double-click for lightbox

Cell Actions

Hover to reveal actions. Hold Shift for more:

| | Action | Description |
|---|---|---|
| 🔍 | Details | Full output, prompt, variables, grading results |
| 👍 | Pass | Mark as passed (score = 1.0) |
| 👎 | Fail | Mark as failed (score = 0.0) |
| 🔢 | Score | Set custom score (0-1) |
| ✏️ | Comment | Add notes |
| ⭐ | Highlight | Mark for review (Shift) |
| 📋 | Copy | Copy to clipboard (Shift) |
| 🔗 | Share | Link to this output (Shift) |

Ratings and comments persist and are included in exports—use them to build training datasets.

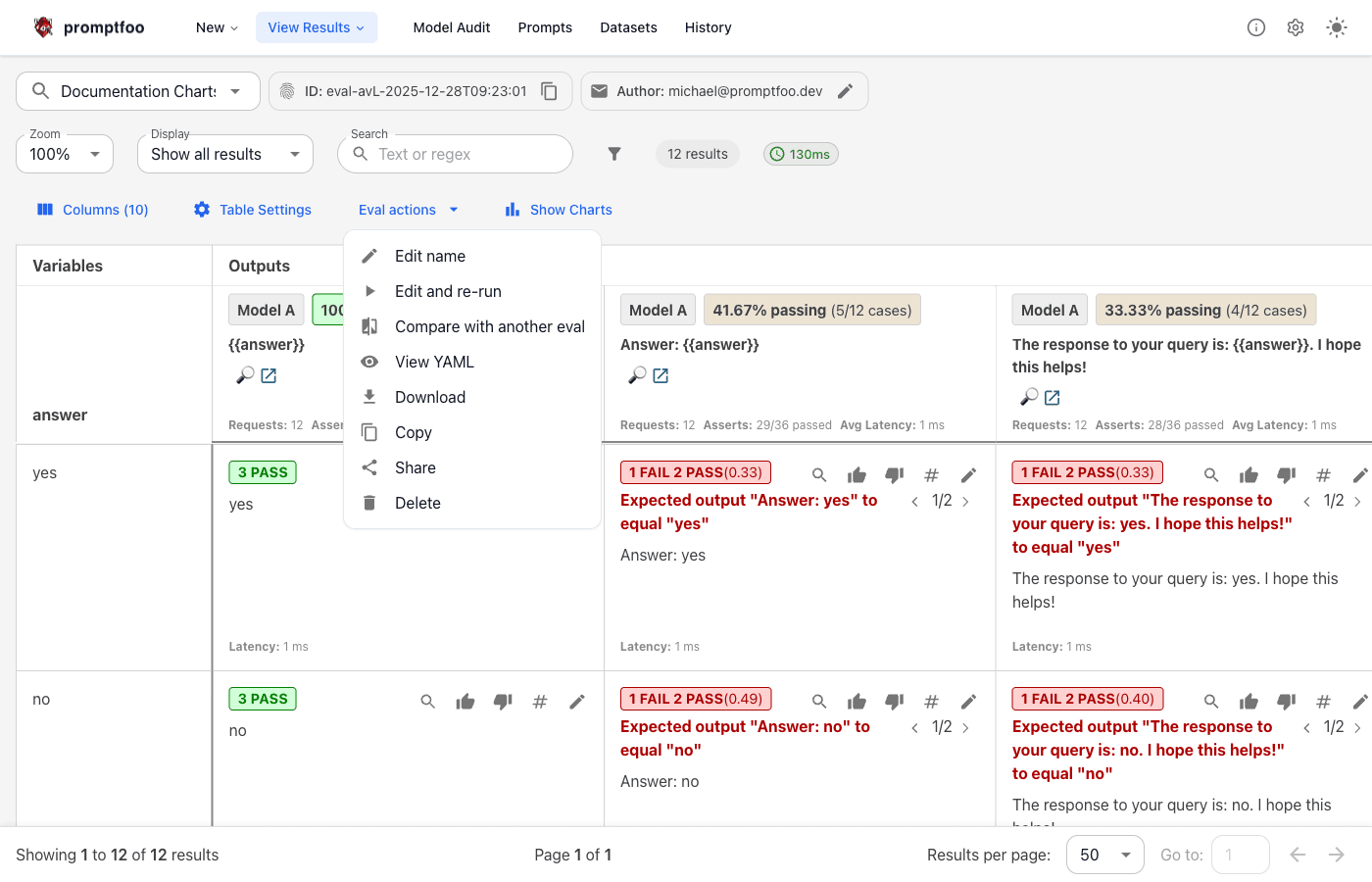

Eval Actions

- Edit name - Rename eval

- Edit and re-run - Open in eval creator

- Compare - Diff against another eval (green = added, red = removed)

- View YAML - Show config

- Download - Opens export dialog:

| Export | Use case |
|---|---|
| YAML config | Re-run the eval |
| Failed tests only | Debug failures |
| CSV / JSON | Analysis, reporting |
| DPO JSON | Preference training data |
| Human Eval YAML | Human labeling workflows |
| Burp payloads | Security testing (red team only) |

- Copy - Duplicate eval

- Share - Generate URL (see Sharing)

- Delete

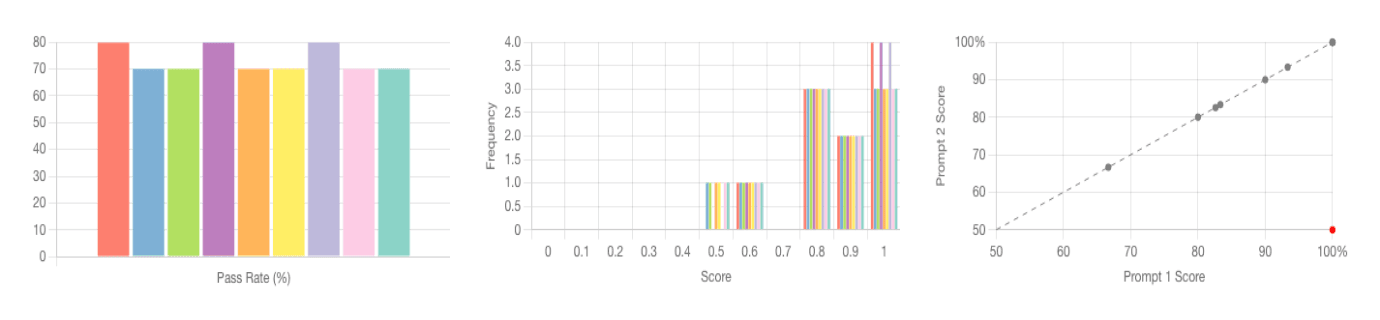

Results Charts

Toggle with Show Charts.

Pass Rate

Percentage of tests where all assertions passed.
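
As a rough illustration of that definition, the sketch below computes a pass rate from per-test assertion outcomes. The input shape (a list of boolean lists) and the `pass_rate` name are assumptions made for the example, not the viewer's internal data model.

```python
def pass_rate(tests):
    """Percentage of tests in which every assertion passed.

    `tests` is a hypothetical list with one entry per test; each entry
    is a list of booleans, one per assertion.
    """
    if not tests:
        return 0.0
    # all([]) is True, so a test with no assertions counts as passed here.
    passed = sum(1 for assertions in tests if all(assertions))
    return 100.0 * passed / len(tests)


results = [
    [True, True],    # passes: both assertions passed
    [True, False],   # fails: one assertion failed
    [True],          # passes
    [False, False],  # fails
]
print(pass_rate(results))  # 50.0
```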

Score Distribution

Histogram of scores per prompt. Each test score = mean of its assertion scores. See weighted assertions.
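
A minimal sketch of the per-test score, assuming each assertion carries a score and an optional weight. With every weight equal to 1 this reduces to the plain mean described above; the weighted form is an assumption based on the pointer to weighted assertions, and `mean_score` is a hypothetical name.

```python
def mean_score(assertions):
    """Weighted mean of (score, weight) pairs for one test.

    Each pair is (score in [0, 1], non-negative weight). Illustrative
    only; not taken from the viewer's source.
    """
    total_weight = sum(weight for _, weight in assertions)
    if total_weight == 0:
        return 0.0
    return sum(score * weight for score, weight in assertions) / total_weight


# Equal weights: plain mean of the assertion scores.
print(mean_score([(1.0, 1), (0.0, 1)]))  # 0.5

# A weight of 3 on the first assertion pulls the score toward it.
print(mean_score([(1.0, 3), (0.0, 1)]))  # 0.75
```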

Scatter Plot

Compare two prompts head-to-head. Click to select prompts.

- Green = Prompt 2 scored higher

- Red = Prompt 1 scored higher

- Gray = Same score
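
The color rule can be sketched as a small function. The `tol` parameter for treating near-equal floats as the same score is an assumption added for the example; the viewer may compare scores exactly.

```python
def point_color(prompt1_score, prompt2_score, tol=1e-9):
    """Color for one test in a head-to-head comparison of two prompts."""
    if abs(prompt1_score - prompt2_score) <= tol:
        return "gray"   # same score
    if prompt2_score > prompt1_score:
        return "green"  # Prompt 2 scored higher
    return "red"        # Prompt 1 scored higher


# Hypothetical (Prompt 1, Prompt 2) score pairs, one per test.
pairs = [(0.5, 1.0), (1.0, 0.25), (0.75, 0.75)]
print([point_color(p1, p2) for p1, p2 in pairs])
```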

Sharing

Eval actions → Share generates a URL.

Cloud

Free at promptfoo.app. Links are private to your organization.

promptfoo auth login -k YOUR_API_KEY

promptfoo share

Self-hosted

In promptfooconfig.yaml:

sharing:
  apiBaseUrl: http://your-server:3000
  appBaseUrl: http://your-server:3000

Or set via API Settings in the top-right menu. See sharing docs for auth and CI/CD.

URL Parameters

Viewer state syncs to the URL—bookmark or share filtered views:

| Parameter | Values |
|---|---|
| filterMode | all, failures, passes, errors, different, highlights |
| search | Any text |

/eval/abc123?filterMode=failures&search=timeout
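
Links like this can also be generated from a script, for example to post a filtered view in a CI comment. The sketch below uses only the two parameters listed in the table; `build_view_url` is a hypothetical helper, and the default `base_url` is an assumed local viewer address that should be replaced with your own.

```python
from urllib.parse import urlencode

# Values accepted for filterMode, per the table above.
FILTER_MODES = {"all", "failures", "passes", "errors", "different", "highlights"}


def build_view_url(eval_id, filter_mode=None, search=None,
                   base_url="http://localhost:15500"):
    """Build a link to a filtered view of one eval.

    base_url is an assumption; point it at wherever your viewer runs.
    """
    params = {}
    if filter_mode is not None:
        if filter_mode not in FILTER_MODES:
            raise ValueError(f"unknown filterMode: {filter_mode}")
        params["filterMode"] = filter_mode
    if search:
        params["search"] = search
    path = f"{base_url}/eval/{eval_id}"
    query = urlencode(params)  # percent-encodes values; spaces become '+'
    return f"{path}?{query}" if query else path


print(build_view_url("abc123", filter_mode="failures", search="timeout"))
```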